Tiny Robots Carry Stem Cells Through a Mouse

Using this technique, microrobots could deliver stem cells to hard-to-reach places

By Emily Waltz

Images: DGIST-ETH Microrobotics Research Center

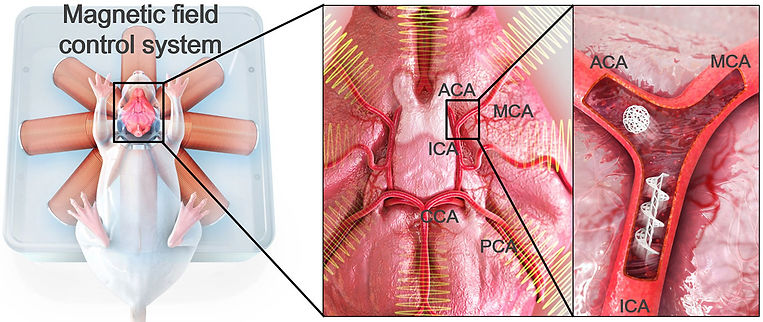

This illustration shows how microrobots could move stem cells in the body of a mouse. The panel on the far right shows a helical robot, controlled by external magnetic fields, as it travels through a blood vessel in the mouse's face.

Engineers have built microrobots to perform all sorts of tasks in the body, and can now add to that list another key skill: delivering stem cells. In a paper published today in Science Robotics, researchers describe propelling a magnetically controlled, stem-cell-carrying bot through a live mouse.

Under a rotating magnetic field, the microrobots moved with rolling and corkscrew-style locomotion. The researchers, led by Hongsoo Choi of the Daegu Gyeongbuk Institute of Science & Technology (DGIST), in South Korea, also demonstrated their bot’s moves in slices of mouse brain, in blood vessels isolated from rat brains, and in a multi-organ-on-a-chip.

The invention provides an alternative way to deliver stem cells, which are increasingly important in medicine. Such cells can be coaxed into becoming nearly any kind of cell, making them great candidates for treating neurodegenerative disorders such as Alzheimer’s.

But delivering stem cells typically requires an injection with a needle, which lowers the survival rate of the stem cells and limits their reach in the body. Microrobots, however, have the potential to deliver stem cells to precise, hard-to-reach areas, with less damage to surrounding tissue and better survival rates, says Jin-young Kim, a principal investigator at the DGIST-ETH Microrobotics Research Center and an author on the paper.

The virtues of microrobots have inspired several research groups to propose and test different designs in simple conditions, such as microfluidic channels and other static environments. A group out of Hong Kong last year described a burr-shaped bot that carried cells through live, transparent zebrafish.

Holding an egg is a lot different from holding an apple or a tomato, and humans are naturally able to adjust their grip to avoid crushing or dropping each object.

Artificial hands installed on prosthetic limbs and robots don't have that natural ability—yet.

An inexpensive, sensor-laden glove could lay the groundwork for advanced prosthetic hands that are better able to grasp and manipulate day-to-day objects, Massachusetts Institute of Technology researchers report.

The glove costs just $10 but contains a network of 548 sensors that gather detailed information about objects being manipulated by the hand inside the glove, they said.

Using the glove, the researchers are creating "tactile maps" that could be used to improve the dexterity of artificial hands, said lead scientist Subramanian Sundaram, a postdoctoral researcher at MIT.

"You can look at these tactile maps and begin to understand what kind of objects you're interacting with. We also show you can use these tactile maps to estimate the weight of the object you're carrying," Sundaram said. "We can clearly unravel or quantify how the different regions of the hand come together to perform a grasping task."

Sundaram and his colleagues developed the glove to help improve the fine motor skills of robot hands.

"This has really been a grand challenge in all of robotics—how do you build dexterous robots, robots that can manipulate objects much in the way humans do?" Sundaram said.

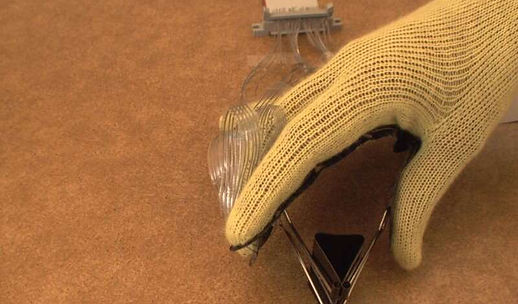

Their solution involves a simple knitted glove that has a force-sensitive film applied along the touching surfaces of the palm and fingers, feeding data to hundreds of sensors.

Researchers wearing the glove interacted with a set of 26 different objects with a single hand for more than five hours, creating a huge amount of tactile data.

They then fed the data into a computer, training it to identify each object from the way it was held.

"We can look at a collective set of interactions and we can say with generality how different regions of the hand are used together," Sundaram said. "You can understand how likely you are to use each region in combination with the others to grasp an object."

Using this data, robots fitted with similar sensors could be taught to handle and manipulate fine objects without squeezing them too hard or handling them with butterfingers, Sundaram said.